Visual Language Maps For Robot Navigation: MIT researchers have created an AI navigation method that uses language to guide robots. Rather than encoding visual features from images of a robot’s surroundings, the method employs a large language model to process textual descriptions of visual scenes. These descriptions are then fed into one large language model that handles all parts of the multistep navigation task.

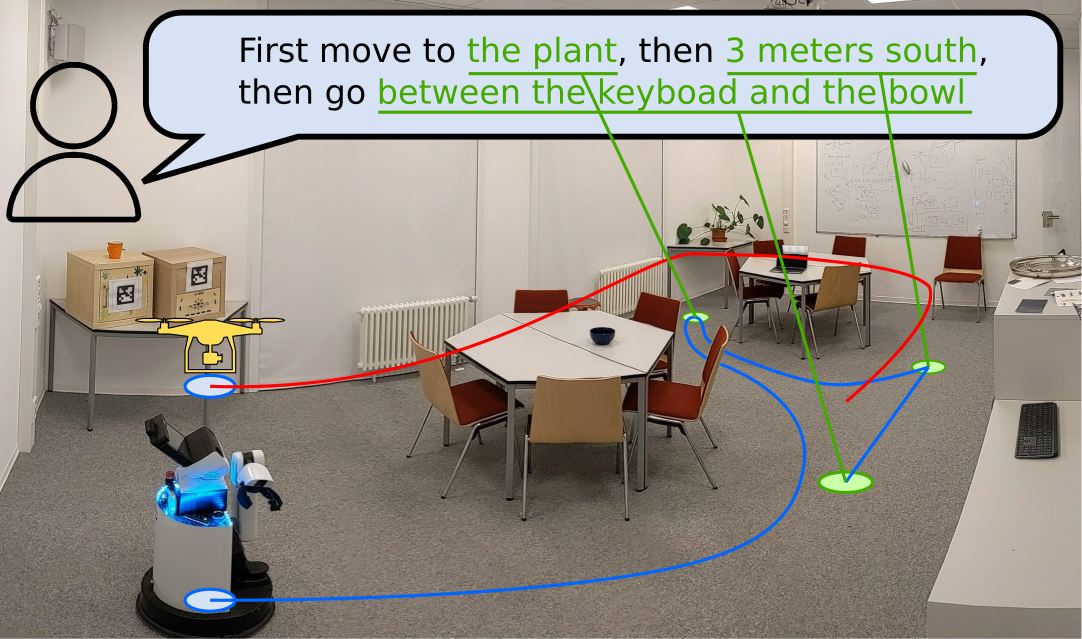

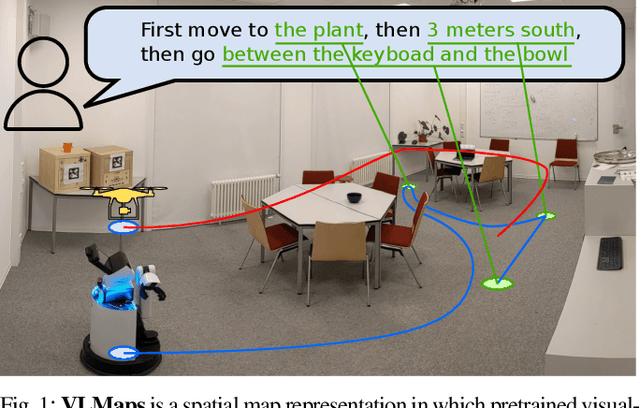

Visual Language Maps For Robot Navigation

Source : vlmaps.github.io

Visual Language Maps for Robot Navigation | Oier Mees

Source : www.oiermees.com

Visual language maps for robot navigation

Source : research.google

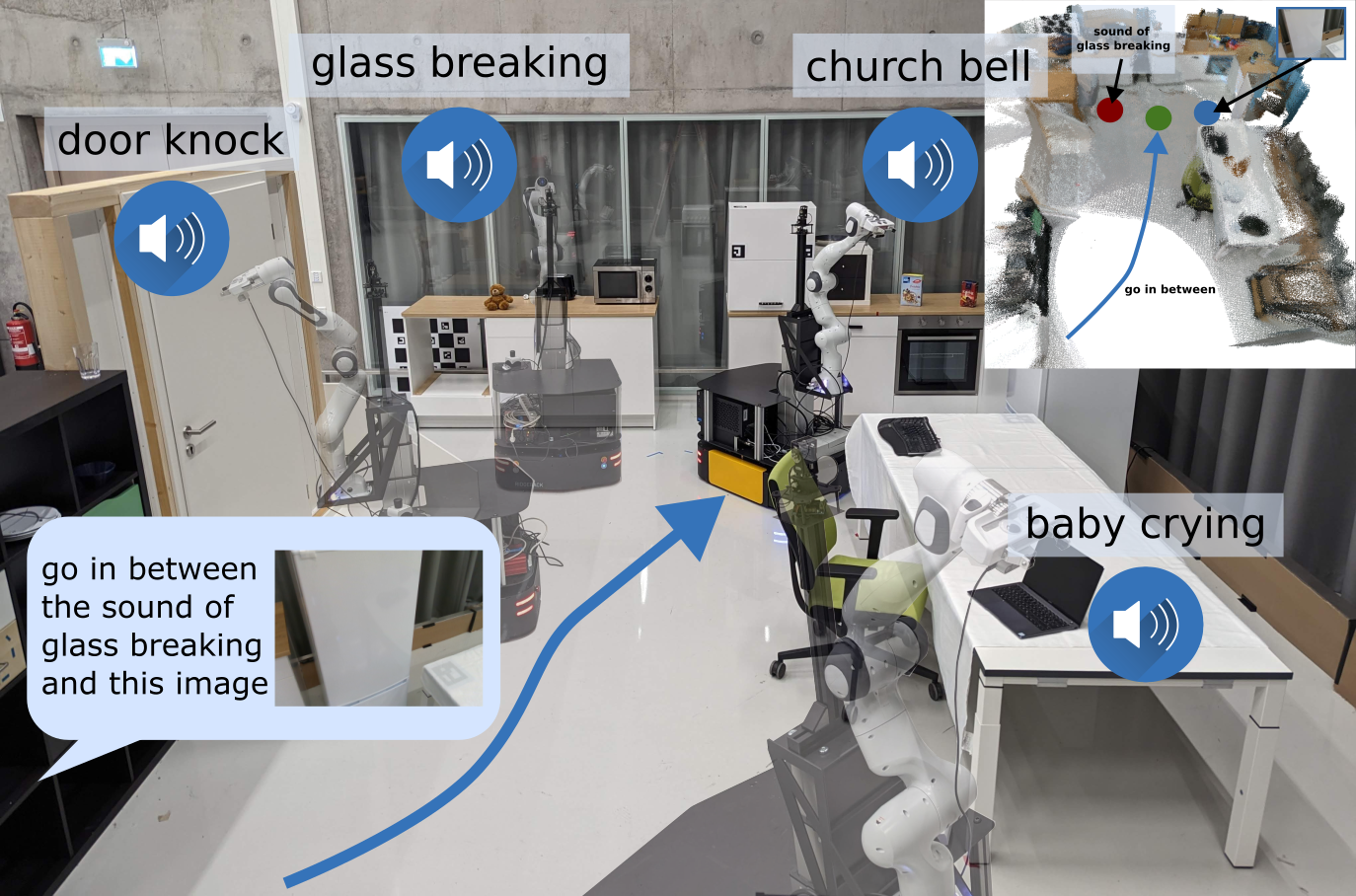

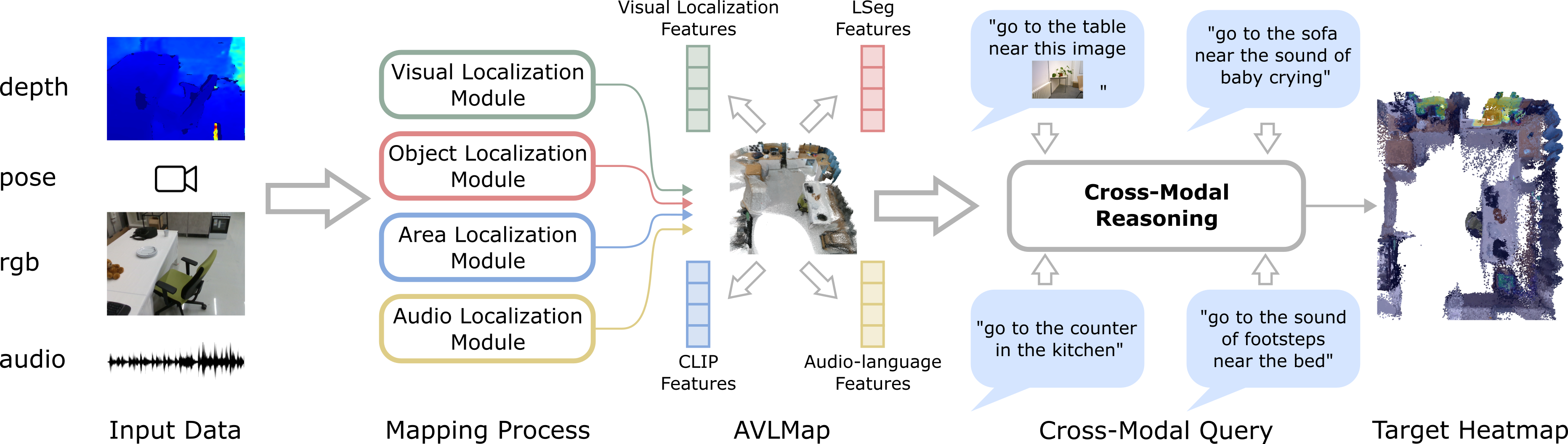

Audio Visual Language Maps for Robot Navigation

Source : avlmaps.github.io

Audio Visual Language Maps for Robot Navigation | Oier Mees

Source : www.oiermees.com

GitHub vlmaps/vlmaps: [ICRA2023] Implementation of Visual

Source : github.com

Wolfram Burgard on LinkedIn: Visual Language Maps for Robot Navigation

Source : www.linkedin.com

Visual Language Maps for Robot Navigation

Source : www.catalyzex.com

Visual Language Maps for Robot Navigation: Generative AI has already shown a lot of promise in robots. Applications include natural language interactions, robot learning, no-code programming and even design. Google’s DeepMind Robotics team this week is showcasing another potential sweet spot between the two disciplines: navigation. In a paper titled “Mobility …